
Learn how to deploy NGINX on Kubernetes with our comprehensive step-by-step guide. Enhance your application deployment with scalable and reliable solutions, complete with YAML examples, advanced configurations, and monitoring tips. Perfect for developers and sysadmins alike.

In today’s fast-paced tech world, deploying applications efficiently is crucial. Kubernetes, an open-source platform for automating the deployment, scaling, and operation of application containers, greatly simplifies this process. NGINX, a high-performance HTTP server and reverse proxy, is a popular choice for load balancing and serving static content. This guide walks you through deploying NGINX on Kubernetes so that you end up with a scalable and reliable setup.

Kubernetes provides a robust infrastructure for running applications in containers and simplifies scaling, management, and monitoring. NGINX, known for its speed and reliability, pairs well with Kubernetes by handling HTTP requests efficiently and acting as a load balancer.

Benefits of Using Kubernetes

Benefits of Using NGINX

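Before we dive into deploying NGINX on Kubernetes, ensure you have the following:

- A running Kubernetes cluster.
- The kubectl command-line tool installed and configured to access that cluster.
- Helm, if you plan to follow the monitoring and logging section.

Step 1: Create a Namespace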

Namespaces in Kubernetes help organize resources. First, create a namespace for your NGINX deployment.

```bash
kubectl create namespace nginx-deployment
```

```
namespace/nginx-deployment created
```
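The same namespace can also be created declaratively, which is handy if you keep every object in version control. As a minimal sketch, the hypothetical helper `namespace_manifest` below (our own name, not part of any Kubernetes library) checks the name against the RFC 1123 label rule Kubernetes applies to namespace names (lowercase letters, digits, and `-`, starting and ending with a letter or digit, at most 63 characters) and prints a JSON manifest; `kubectl apply -f` accepts JSON as well as YAML.

```python
import json
import re

# RFC 1123 label: lowercase alphanumerics or '-', starting and ending with an alphanumeric.
DNS1123_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def namespace_manifest(name: str) -> dict:
    """Hypothetical helper: return a Namespace manifest, rejecting names Kubernetes would refuse."""
    if len(name) > 63 or DNS1123_LABEL.fullmatch(name) is None:
        raise ValueError(f"invalid namespace name: {name!r}")
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


if __name__ == "__main__":
    # Save this output as namespace.json and run: kubectl apply -f namespace.json
    print(json.dumps(namespace_manifest("nginx-deployment"), indent=2))
```

Applying the printed JSON should have the same effect as the `kubectl create namespace` command, with the advantage that re-applying it is harmless.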

Step 2: Deploy NGINX

Create a deployment YAML file for NGINX.

```yaml
# nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
```

Apply this deployment to your Kubernetes cluster.

```bash
kubectl apply -f nginx-deployment.yaml
```

```
deployment.apps/nginx-deployment created
```
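Kubernetes rejects a Deployment whose `spec.selector` does not match the labels in `spec.template.metadata.labels`, an easy mistake when editing the YAML by hand. As a minimal sketch (the helper names are hypothetical), the Deployment from `nginx-deployment.yaml` can be built in Python with a check that every `matchLabels` entry appears in the Pod template labels, then emitted as JSON for `kubectl apply -f`.

```python
import json


def deployment_manifest(name: str, namespace: str, image: str, replicas: int) -> dict:
    """Hypothetical helper: build a Deployment equivalent to nginx-deployment.yaml."""
    labels = {"app": "nginx"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},  # copies, so each can be edited separately
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": "nginx", "image": image, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


def selector_matches_template(manifest: dict) -> bool:
    """True if every matchLabels pair is present in the Pod template labels.

    Only matchLabels is modeled; matchExpressions selectors are ignored in this sketch.
    """
    selector = manifest["spec"]["selector"]["matchLabels"]
    labels = manifest["spec"]["template"]["metadata"]["labels"]
    return all(labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    manifest = deployment_manifest("nginx-deployment", "nginx-deployment", "nginx:latest", 3)
    assert selector_matches_template(manifest)
    print(json.dumps(manifest, indent=2))
```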

Step 3: Expose NGINX Service

To make NGINX accessible, create a service to expose the deployment.

```yaml
# nginx-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: nginx-deployment
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: LoadBalancer
```

Apply this service definition.

```bash
kubectl apply -f nginx-service.yaml
```

```
service/nginx-service created
```
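The Service finds its backends purely by label: any Pod in the same namespace whose labels include every key/value pair in `spec.selector` (here `app: nginx`) is a candidate endpoint, and traffic goes only to those that are Ready. The hypothetical helper below mimics that equality-based matching on plain dictionaries; it illustrates the rule and is not how the Kubernetes endpoints controller is implemented.

```python
def selected_pods(selector: dict, pods: dict) -> list:
    """Hypothetical helper: names of pods whose labels contain every pair in `selector`.

    `pods` maps a pod name to that pod's labels. Pod readiness is not modeled.
    """
    if not selector:
        # A Service without a selector does not pick Pods automatically.
        return []
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


if __name__ == "__main__":
    # Made-up pod names and labels for illustration.
    pods = {
        "nginx-deployment-abc12": {"app": "nginx", "pod-template-hash": "abc"},
        "nginx-deployment-def34": {"app": "nginx", "pod-template-hash": "abc"},
        "redis-0": {"app": "redis"},
    }
    print(selected_pods({"app": "nginx"}, pods))
```

Extra labels on a Pod (such as `pod-template-hash`, which Deployments add) do not prevent a match; only labels named in the selector are compared.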

Step 4: Verify Deployment

Check the status of your deployment and service.

```bash
kubectl get deployments -n nginx-deployment
```

```
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   3/3     3            3           32s
```

```bash
kubectl get services -n nginx-deployment
```

```
NAME            TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
nginx-service   LoadBalancer   10.97.81.189   <pending>     80:30378/TCP   103s
```

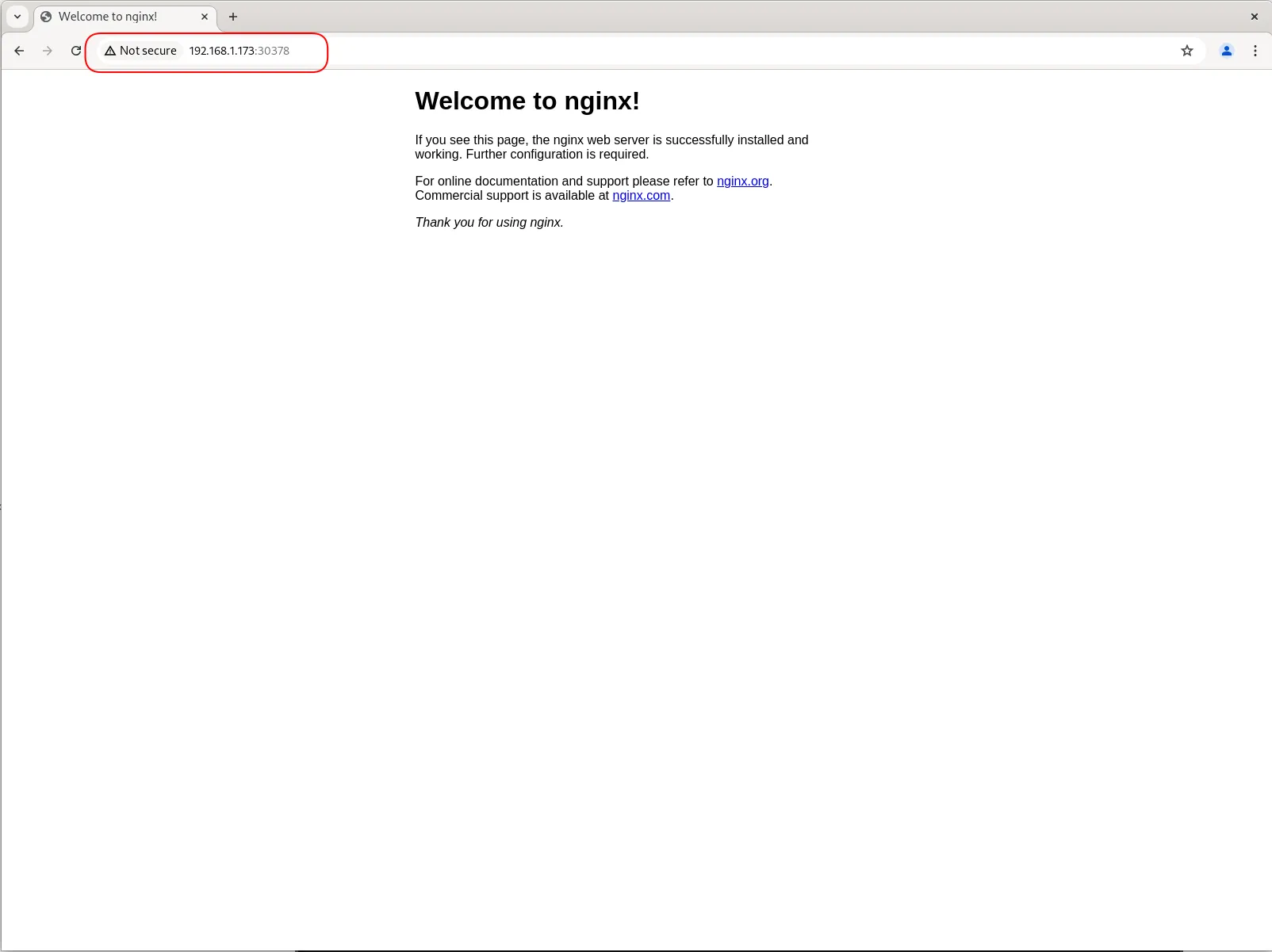

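The deployment should report 3/3 ready replicas, and the service should be listed with its port mapping (`kubectl get pods -n nginx-deployment` shows the individual NGINX pods). On clusters without a cloud load-balancer integration, such as a local or bare-metal cluster, EXTERNAL-IP stays `<pending>`, but a LoadBalancer service is still reachable through the NodePort shown in the PORT(S) column (30378 here). You can verify that the web page is up by opening `http://<node-ip>:30378` in your browser, replacing `<node-ip>` with the IP address of one of your nodes.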

Photo by admingeek from Infotechys
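The PORT(S) column reads `<port>:<nodePort>/<protocol>`, so in `80:30378/TCP` the second number is the NodePort. The small helper below (hypothetical, standard library only) extracts it and builds the browser URL; the node IP is yours to supply. It also checks the port against 30000-32767, the default NodePort range, which a cluster administrator can change with the API server's `--service-node-port-range` flag, hence the `allowed` parameter.

```python
import re

# One entry of the PORT(S) column, e.g. "80:30378/TCP" -> port 80, nodePort 30378.
PORT_ENTRY = re.compile(r"(?P<port>\d+):(?P<node_port>\d+)/(?P<protocol>[A-Z]+)")

# Default NodePort range (30000-32767 inclusive); clusters may configure a different one.
DEFAULT_NODE_PORTS = range(30000, 32768)


def node_port_url(node_ip: str, ports_column: str, allowed: range = DEFAULT_NODE_PORTS) -> str:
    """Hypothetical helper: build http://<node-ip>:<nodePort> from a PORT(S) value."""
    first_entry = ports_column.split(",")[0].strip()
    match = PORT_ENTRY.fullmatch(first_entry)
    if match is None:
        # e.g. "80/TCP" for a ClusterIP service, which has no NodePort.
        raise ValueError(f"no NodePort in {ports_column!r}")
    node_port = int(match.group("node_port"))
    if node_port not in allowed:
        raise ValueError(f"{node_port} is outside the expected NodePort range")
    return f"http://{node_ip}:{node_port}"
```

For example, with a made-up node IP, `node_port_url("192.168.1.10", "80:30378/TCP")` returns `"http://192.168.1.10:30378"`.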

Kubernetes makes scaling straightforward. To scale the NGINX deployment to 5 replicas, run:

```bash
kubectl scale deployment/nginx-deployment --replicas=5 -n nginx-deployment
```

```
deployment.apps/nginx-deployment scaled
```

Verify the scaling:

```bash
kubectl get deployments -n nginx-deployment
```

```
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   5/5     5            5           8m52s
```
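Rather than re-running `kubectl get deployments` until READY shows 5/5, a script can poll the Deployment's `status.readyReplicas` field. This sketch assumes `kubectl` is on your PATH and configured for the cluster; `kubectl_ready_replicas` and `wait_for_ready` are hypothetical names, and the polling loop takes the reader as a function so it can be exercised without a cluster. If you only need to block in a shell, `kubectl rollout status deployment/nginx-deployment -n nginx-deployment` is the built-in way to wait on a Deployment.

```python
import subprocess
import time
from typing import Callable


def kubectl_ready_replicas(name: str, namespace: str) -> int:
    """Read .status.readyReplicas via kubectl; the field is omitted (empty output) when zero."""
    out = subprocess.run(
        ["kubectl", "get", "deployment", name, "-n", namespace,
         "-o", "jsonpath={.status.readyReplicas}"],
        capture_output=True, text=True, check=True,
    ).stdout.strip()
    return int(out) if out else 0


def wait_for_ready(get_ready: Callable[[], int], desired: int,
                   timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Poll get_ready() until it reports at least `desired` replicas or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        if get_ready() >= desired:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_ready(lambda: kubectl_ready_replicas("nginx-deployment", "nginx-deployment"), desired=5)` returns `True` once five replicas report ready, or `False` after the default two-minute timeout.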

For more advanced scenarios, consider configuring an Ingress controller. Ingress in Kubernetes manages external access to services, typically HTTP.

Create an Ingress Resource

First, install an Ingress controller (e.g., ingress-nginx, the community-maintained NGINX Ingress Controller).

```bash
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/cloud/deploy.yaml
```

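Next, create an Ingress resource. The `ingressClassName` field tells Kubernetes which controller should handle it; the ingress-nginx manifest above registers an IngressClass named `nginx`, and without this field (or a default IngressClass) the controller may ignore the resource.

```yaml
# nginx-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  namespace: nginx-deployment
spec:
  ingressClassName: nginx  # IngressClass created by the ingress-nginx manifest
  rules:
    - host: nginx.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
```

Apply the Ingress resource.

```bash
kubectl apply -f nginx-ingress.yaml
```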
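With `pathType: Prefix`, Kubernetes matches the request path element by element after splitting it on `/`, so the single rule for `/` sends every path on nginx.example.com to nginx-service. The function below is a simplified model of that documented rule: it is case-sensitive and ignores trailing slashes, as the Kubernetes documentation describes, but it does not model precedence between several rules or `Exact` paths. `prefix_matches` is a hypothetical name.

```python
def path_elements(path: str) -> list:
    """Split a URL path on "/" and drop empty elements: "/foo/bar/" -> ["foo", "bar"]."""
    return [element for element in path.split("/") if element]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Simplified model of Ingress pathType: Prefix (case-sensitive, element-wise).

    Dropping empty elements makes trailing slashes irrelevant, and also collapses
    repeated slashes, which is a simplification of this sketch.
    """
    rule = path_elements(rule_path)
    return path_elements(request_path)[: len(rule)] == rule
```

So `prefix_matches("/foo", "/foo/bar")` is `True` while `prefix_matches("/foo", "/foobar")` is `False`: a prefix must match whole path elements, not just leading characters.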

Verify Ingress

Make sure a DNS record for nginx.example.com points to the Ingress controller’s external IP address, then verify the Ingress rules.

```bash
kubectl get ingress -n nginx-deployment
```
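Until DNS is in place, you can still exercise the Ingress rule by sending a request straight to the controller’s IP with the `Host` header set, since that header is what the controller compares with the rule’s `host`. A minimal standard-library sketch follows (the helper names are hypothetical). With the ingress-nginx manifest installed earlier, the IP is typically the EXTERNAL-IP of the `ingress-nginx-controller` Service in the `ingress-nginx` namespace, but treat that as an assumption to check in your cluster.

```python
import urllib.error
import urllib.request


def ingress_probe(ingress_ip: str, host: str, path: str = "/") -> urllib.request.Request:
    """Hypothetical helper: GET request to the controller IP carrying the virtual-host name."""
    # urllib keeps an explicit Host header instead of deriving one from the URL.
    return urllib.request.Request(f"http://{ingress_ip}{path}", headers={"Host": host})


def probe_status(request: urllib.request.Request, timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status code (network errors still raise)."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # A 4xx/5xx still means the controller answered; a 404 often means no rule matched.
        return err.code
```

`probe_status(ingress_probe("<controller-ip>", "nginx.example.com"))` should return 200 when the rule routes to nginx-service; it performs the same check as `curl -H "Host: nginx.example.com" http://<controller-ip>/`.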

Monitoring and logging are crucial for maintaining the health of your NGINX deployment. Several widely used open-source tools integrate well with Kubernetes for this purpose.

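Prometheus and Grafana

Prometheus is a monitoring system and time-series database. Grafana is an analytics and visualization platform, commonly used to build dashboards on top of Prometheus data. Install both with Helm after adding their chart repositories:

```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo add grafana https://grafana.github.io/helm-charts
helm repo update
helm install prometheus prometheus-community/prometheus
helm install grafana grafana/grafana
```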
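Once Prometheus is running, its HTTP API answers PromQL queries at `/api/v1/query`. The sketch below builds such a query URL and flattens an instant-vector response into `(labels, value)` pairs. It assumes you have made the server reachable at a base URL such as `http://localhost:9090` (for example with `kubectl port-forward`); that address and the helper names are assumptions, not values the Helm chart guarantees.

```python
import json
import urllib.parse
import urllib.request


def query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def vector_results(payload: dict) -> list:
    """Turn an instant-vector response into (labels, float value) pairs."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector, got {data['resultType']}")
    # Each sample's "value" is [unix_timestamp, "value as a string"].
    return [(item["metric"], float(item["value"][1])) for item in data["result"]]


def run_query(base_url: str, promql: str) -> list:
    """Fetch and parse a query result (needs a reachable Prometheus server)."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=10) as response:
        return vector_results(json.load(response))
```

Querying the built-in `up` metric with `run_query("http://localhost:9090", "up")` lists each scrape target with 1.0 if its last scrape succeeded and 0.0 otherwise.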

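ELK Stack

The ELK (Elasticsearch, Logstash, and Kibana) stack provides powerful logging capabilities. Add the Elastic chart repository, then install each component:

```bash
helm repo add elastic https://helm.elastic.co
helm repo update
helm install elasticsearch elastic/elasticsearch
helm install logstash elastic/logstash
helm install kibana elastic/kibana
```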
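The official nginx image sends its access log to stdout, so `kubectl logs` on an NGINX pod shows one line per request, and a pipeline such as Logstash typically splits each line into fields before indexing it in Elasticsearch. As a rough Python stand-in for that parsing step (not a Logstash configuration), the regex below handles NGINX’s `combined` log format plus an optional trailing quoted `X-Forwarded-For` field, which the image’s default `main` format appears to add; verify the format against your own pod logs. `parse_access_log` is a hypothetical name.

```python
import re

# combined: $remote_addr - $remote_user [$time_local] "$request" $status
#           $body_bytes_sent "$http_referer" "$http_user_agent"
# An optional trailing "$http_x_forwarded_for" field is also accepted.
LOG_LINE = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"(?: "(?P<forwarded_for>[^"]*)")?'
)


def parse_access_log(line: str):
    """Return a dict of fields for one access-log line, or None if it does not match."""
    match = LOG_LINE.fullmatch(line.strip())
    if match is None:
        return None
    fields = match.groupdict()  # forwarded_for is None when the field is absent
    fields["status"] = int(fields["status"])
    fields["body_bytes_sent"] = int(fields["body_bytes_sent"])
    return fields
```

Counting `status` values across parsed lines gives a quick error-rate check before a full ELK pipeline is in place.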

Deploying NGINX on Kubernetes combines the power of two robust technologies, ensuring high performance, scalability, and resilience. By following this guide, you can set up and manage a scalable NGINX deployment on Kubernetes. For advanced needs, consider exploring Ingress controllers and integrating monitoring and logging solutions.

Deploying applications has never been easier with Kubernetes and NGINX. Whether you’re a developer looking to streamline your deployment process or a sysadmin seeking robust solutions, this combination is a powerful tool in your arsenal.

